Big Data & AI: the symphony of data and measures

Who has never been moved by a song or another piece of music, perhaps an orchestral masterpiece or a catchy tune on the radio? What is the magic behind the thrill a melody can give us?

For music to make the body resonate in that special way, every note must be in its place, produced accurately and precisely by the instrument, whether string, brass, percussion or voice… Only when all the ‘ingredients’ are present in the right measure can anyone say “This music is good”.

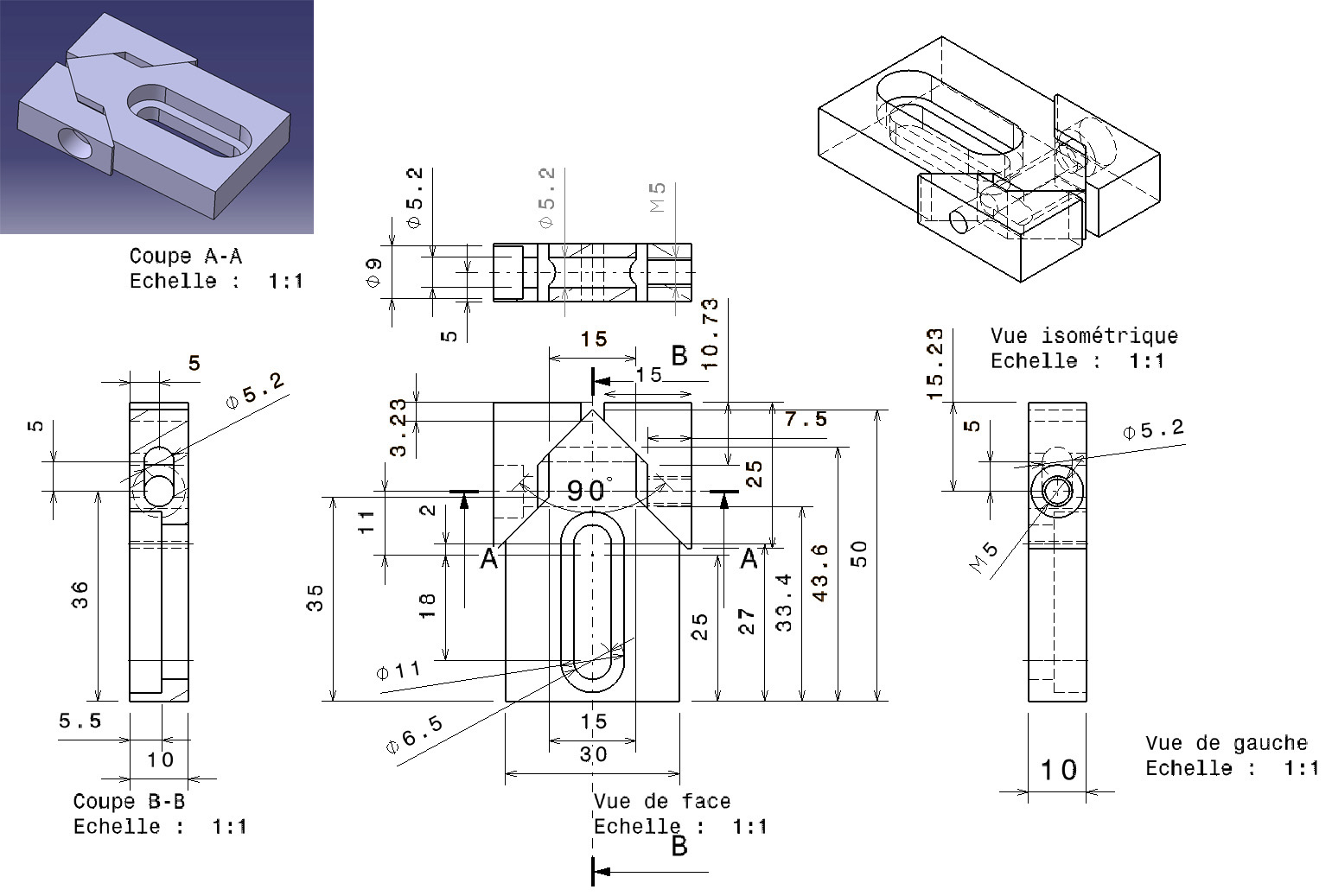

At first sight, the two images above don’t have anything in common. The first, a music score, tells musicians which notes to play and when to play them. The second provides the tolerances that must be respected for an entity to conform to customer requirements.

So what is the link? In both cases, the customer on the one hand and the music lover on the other can be satisfied if, and only if, the instructions are followed strictly…
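The “if, and only if” rule can be made concrete with a small sketch. Assuming each characteristic of an entity has a nominal value with allowed deviations below and above it (the characteristic names and numbers here are hypothetical, not taken from the drawing above), the entity conforms only when every single measurement falls inside its tolerance band:

```python
from dataclasses import dataclass


@dataclass
class Tolerance:
    """Hypothetical tolerance: nominal value with allowed deviations."""
    nominal: float
    lower: float  # allowed deviation below nominal (positive number)
    upper: float  # allowed deviation above nominal (positive number)

    def accepts(self, value: float) -> bool:
        # The value must lie within [nominal - lower, nominal + upper]
        return self.nominal - self.lower <= value <= self.nominal + self.upper


def conforms(measurements: dict, spec: dict) -> bool:
    """An entity conforms if, and only if, every characteristic is in tolerance."""
    return all(spec[name].accepts(measurements[name]) for name in spec)


# Hypothetical specification for illustration only
SPEC = {
    "diameter_mm": Tolerance(10.0, 0.05, 0.05),
    "length_mm": Tolerance(50.0, 0.1, 0.2),
}

print(conforms({"diameter_mm": 10.02, "length_mm": 50.15}, SPEC))  # True
print(conforms({"diameter_mm": 10.07, "length_mm": 50.0}, SPEC))   # False
```

A single out-of-tolerance characteristic is enough to reject the entity, just as a single wrong note can spoil the piece.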

The job of a conductor is to make sure all the instruments are played together, at the right tempo, in order to produce the music the listener wants to hear. In the factory, the production supervisor in fact serves the same purpose: to make sure that all the factors that influence production are simultaneously under control, thus ensuring that the final product will satisfy the customer.

It is easy to draw parallels between the ‘Orchestra’ and the ‘Industrial Process’:

- The score = The plan

- The instruments = The influence factors

- The melody = The entity produced

- The conductor = The production line supervisor

So far, so good. However, there is one other essential component: the composer of the score.

In the industrial world today, the ‘plan’ is usually designed by the R&D department, where the tendency is to keep doing things the way they have always been done. Yet even the recent past lacked the enormous data storage, sharing and analysis capabilities that the 21st century offers. With these new tools, the data itself can write the score, almost on its own, informed by the highlights and lowlights of the ‘music’ (the ‘entities’) produced previously.

As everyone knows, it’s not always easy to explain why a note stirs a particular emotion in a music lover. In the same way, it is not always easy to find the reason behind a (perhaps atypical) behaviour pattern in a manufacturing process.

However, by taking the trouble to observe the reactions of the ‘music lovers’ (in industry, the customers), the new composers can produce ‘music’ that has the highest possible chance of giving satisfaction. In the same way, by analysing the historical results of a process, designers can maximize the probability that the entities to be produced will ‘conform’.

Below the surface, though, things can be quite complex. It is perfectly legitimate to try to model a phenomenon with an equation of the form y = f(x₁, x₂, …, xₙ), where y is the result and the xᵢ are the influential parameters (the orchestral instruments and the ‘measure’ of their contribution to the melody). Reality, however, is often more complicated than any such equation.
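To show what an explicit model of this kind looks like, here is a minimal sketch that assumes f is linear, y = b₀ + b₁x₁ + … + bₙxₙ, and fits the coefficients to historical data by ordinary least squares (solving the normal equations). The linearity assumption, the function names and the synthetic data are all hypothetical and chosen only for illustration:

```python
def fit_linear_model(xs, ys):
    """Least-squares coefficients [b0, b1, ..., bn] for y = b0 + sum(bi * xi).

    xs: list of rows [x1, ..., xn] (the influential parameters)
    ys: list of measured results y
    """
    # Design matrix with a leading 1 for the intercept b0
    a = [[1.0] + [float(v) for v in row] for row in xs]
    k = len(a[0])
    # Normal equations: (A^T A) b = A^T y, stored as an augmented matrix
    m = [[sum(r[i] * r[j] for r in a) for j in range(k)]
         + [sum(r[i] * y for r, y in zip(a, ys))] for i in range(k)]
    # Gaussian elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, k):
            factor = m[r][col] / m[col][col]
            for c in range(col, k + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    b = [0.0] * k
    for i in reversed(range(k)):
        b[i] = (m[i][k] - sum(m[i][j] * b[j] for j in range(i + 1, k))) / m[i][i]
    return b


def predict(b, row):
    """Evaluate the fitted model for one set of parameters."""
    return b[0] + sum(bi * xi for bi, xi in zip(b[1:], row))


# Synthetic data generated from the (hypothetical) law y = 1 + 2*x1 + 3*x2
xs = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
ys = [1 + 2 * x1 + 3 * x2 for x1, x2 in xs]
coef = fit_linear_model(xs, ys)
print([round(c, 6) for c in coef])
```

On these noise-free synthetic data the fit recovers coefficients very close to 1, 2 and 3. Real process data rarely obey such a tidy law, which is exactly the limitation the next quotation points to.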

All models are wrong, but some are useful – George E. P. Box

Today’s algorithms do not try to discover analytical mathematical models; instead, they learn from experience, that is, from past data. They describe, without necessarily explaining, how to harmonize the musicians so that ‘the music is good’. In other words, they make it possible to write the score in such a way that the entity will be compliant…
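One simple way to “describe without understanding” is a k-nearest-neighbours classifier, used here as an illustrative stand-in rather than as the specific algorithm the text has in mind. It stores past runs and predicts whether new settings will give a compliant entity by a majority vote of the k most similar past runs, without ever writing down an equation. The historical runs, the two parameters and the choice k = 3 are hypothetical:

```python
import math
from collections import Counter

# Hypothetical historical runs: ((x1, x2), compliant?)
HISTORY = [
    ((20.0, 1.0), True),
    ((21.0, 1.1), True),
    ((22.0, 0.9), True),
    ((30.0, 2.0), False),
    ((31.0, 2.2), False),
    ((19.0, 2.5), False),
]


def predict_compliance(settings, history, k=3):
    """Majority vote among the k past runs closest to `settings`.

    Closeness is plain Euclidean distance; no analytical model is fitted.
    """
    nearest = sorted(history, key=lambda run: math.dist(run[0], settings))[:k]
    votes = Counter(outcome for _, outcome in nearest)
    return votes[True] > votes[False]


print(predict_compliance((21.0, 1.0), HISTORY))  # True: close to compliant runs
print(predict_compliance((30.5, 2.1), HISTORY))  # False: close to failed runs
```

With an odd k and a yes/no outcome, the vote cannot tie. In practice the parameters would usually be rescaled first, since raw Euclidean distance lets the parameter with the largest units dominate.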

In the company of the future, the data scientist is the composer of the score (i.e. of the control settings) for the manufacturing process. He or she has at hand yesterday’s successes and failures (the data) and new analysis methods (the algorithmic tools of Artificial Intelligence) that make it possible to write the most likely ‘recipe’ for achieving conformity. The new score is no longer based on beliefs, as before, but on observed facts. Moreover, today’s algorithms can detect phenomena that are not intuitive, or are even counter-intuitive. Indeed, this capability is the very basis of their effectiveness…

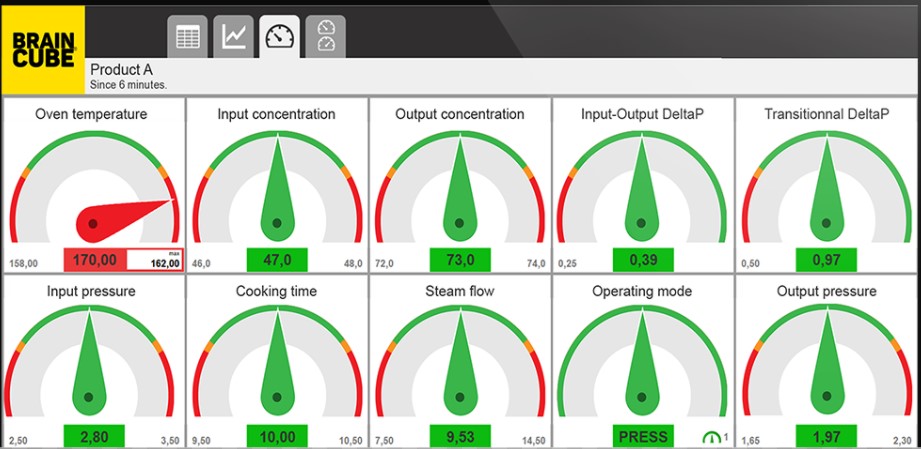

In industry, the ‘score’ generally takes the form of a dashboard showing the conformity ranges of the input parameters that will result in compliant entities. From then on, the operator’s job is simply to stay within the ‘green zones’!
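A very basic version of such a dashboard can be sketched as follows, assuming the green zone of each input parameter is simply the range observed over historically compliant runs. The parameter names and records are hypothetical, and real dashboards may rely on statistical limits rather than raw minima and maxima:

```python
# Hypothetical historical records: input parameters and conformity outcome
RUNS = [
    {"temperature_c": 180.0, "pressure_bar": 5.2, "compliant": True},
    {"temperature_c": 185.0, "pressure_bar": 5.0, "compliant": True},
    {"temperature_c": 190.0, "pressure_bar": 5.5, "compliant": True},
    {"temperature_c": 205.0, "pressure_bar": 6.3, "compliant": False},
    {"temperature_c": 170.0, "pressure_bar": 4.1, "compliant": False},
]
PARAMETERS = ("temperature_c", "pressure_bar")


def green_zones(runs, parameters):
    """Range (min, max) of each parameter over the compliant runs only."""
    ok = [r for r in runs if r["compliant"]]
    return {p: (min(r[p] for r in ok), max(r[p] for r in ok)) for p in parameters}


def in_green_zone(settings, zones):
    """True only if every parameter lies inside its green zone."""
    return all(lo <= settings[p] <= hi for p, (lo, hi) in zones.items())


zones = green_zones(RUNS, PARAMETERS)
print(zones)  # {'temperature_c': (180.0, 190.0), 'pressure_bar': (5.0, 5.5)}
print(in_green_zone({"temperature_c": 186.0, "pressure_bar": 5.3}, zones))  # True
print(in_green_zone({"temperature_c": 195.0, "pressure_bar": 5.3}, zones))  # False
```

Treating each parameter independently keeps the dashboard easy to read for the operator, at the cost of ignoring interactions between parameters that a learned model might capture.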

But for the music to be ‘good’, there is one other crucial factor… The musicians must play the score properly!

To pursue the analogy with the philharmonic orchestra, in the world of Big Data the industrial metrologist is not only the tuner of the measuring instruments but also the guarantor that the musicians can play! The note ‘A’, the musicians’ tuning reference, is the equivalent of the SI (International System of Units), maintained by the BIPM (International Bureau of Weights and Measures). Yet finely tuned, concordant instruments do not make ‘good music’ if they are badly played! That is the whole point: knowing how to play, and playing ‘in tune’ and at the right tempo all the time, so that the music is good…

‘Big Data’ is a technology that promises to write better scores. In order to produce results, it needs:

- a Composer = the Data Scientist

- a Conductor = the Production line supervisor

- Trained musicians who play in tune = Responsibility of the metrologist.

Metrologists no longer have a choice. They cannot limit themselves to tuning the musicians’ instruments: they must actively take part in producing the symphony the music lovers want to hear, because they are responsible for the reliability of the information from which the new score is written. If that information is not reliable, the score will not be a good one! And then it will come as no surprise if the music lovers choose a different orchestra and go elsewhere to hear their music!